Continual Learning for Anomaly based Network Intrusion Detection

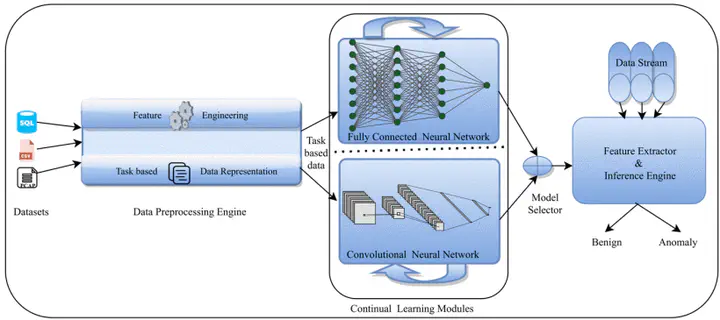

Proposed A-NIDS Design using Continual Learning

Abstract

To defend computing systems against ever-growing cyber attacks, Anomaly-based Network Intrusion Detection Systems (A-NIDS) have to evolve continuously. This requirement renders classical machine learning algorithms ineffective, since they do not handle sequentially evolving tasks gracefully. Specifically, neural networks (NNs) are prone to catastrophic forgetting (CF) when trained on sequential data. Recent advances in addressing this drawback have resulted in a paradigm called Continual Learning (CL), which mitigates CF by introducing suitable constraints during the sequential training of NNs. CL has been shown to be very effective in improving the performance of NNs on computer vision tasks. However, its application to the design of A-NIDS has not been explored. In this work, we evaluate the suitability of CL for addressing the challenges posed in A-NIDS design. Unlike computer vision datasets, network datasets suffer from the Class Imbalance (CI) problem, which makes the direct application of CL algorithms challenging. To evaluate the suitability of CL algorithms on network datasets, we study the impact of CI on task ordering and its effect on the design of CL-based A-NIDS in the Class Incremental Learning (CIL) and Domain Incremental Learning (DIL) settings. To this end, we apply two popular CL algorithms, Elastic Weight Consolidation (EWC) and Gradient Episodic Memory (GEM), to two datasets, CICIDS and KDD Cup'99, and evaluate their performance. We find that CI affects task order sensitivity to a greater extent in the CIL setting than in the DIL setting. The performance of the DIL setting can be further enhanced by incorporating experience forgetting aware memory population techniques, and we recommend this as a practical approach to building CL-based A-NIDS.
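
To make the idea of "suitable constraints during sequential training" concrete, the following is a minimal sketch of the quadratic penalty commonly associated with EWC: parameters that were important for an earlier task (as measured by a diagonal Fisher information estimate) are penalized for drifting from their previously learned values. This is the generic textbook formulation, not necessarily the exact configuration used in this work; the function names, the `lam` hyperparameter, and the toy values are assumptions made for illustration only.

```python
import numpy as np

def ewc_penalty(theta, theta_star, fisher_diag, lam=1.0):
    """Illustrative EWC penalty: (lam / 2) * sum_i F_i * (theta_i - theta_star_i)^2.

    theta       -- current parameters while training on the new task
    theta_star  -- parameters learned on the previous task
    fisher_diag -- diagonal Fisher information estimate (per-parameter importance)
    lam         -- hypothetical hyperparameter trading off old vs. new task
    """
    theta = np.asarray(theta, dtype=float)
    theta_star = np.asarray(theta_star, dtype=float)
    fisher_diag = np.asarray(fisher_diag, dtype=float)
    return 0.5 * lam * float(np.sum(fisher_diag * (theta - theta_star) ** 2))

def ewc_penalty_grad(theta, theta_star, fisher_diag, lam=1.0):
    """Gradient of the penalty with respect to theta."""
    theta = np.asarray(theta, dtype=float)
    theta_star = np.asarray(theta_star, dtype=float)
    fisher_diag = np.asarray(fisher_diag, dtype=float)
    return lam * fisher_diag * (theta - theta_star)

# Toy usage: the total loss on a new task would be new_task_loss + ewc_penalty(...).
penalty = ewc_penalty([1.0, 2.0], [0.0, 0.0], [1.0, 0.5], lam=2.0)
grad = ewc_penalty_grad([1.0, 2.0], [0.0, 0.0], [1.0, 0.5], lam=2.0)
```

In this sketch the penalty is zero when the parameters have not moved from `theta_star`, and it grows fastest along the directions with the largest Fisher values, which is how the constraint discourages forgetting of earlier tasks.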